Since 2015, Be My Eyes has connected its 6.3 million volunteers with blind and low-vision users who need help with everyday tasks. Its new Virtual Volunteer tool, currently in beta testing, will push the company “further toward achieving their goal to improve accessibility, usability, and access to information globally…”

The new Virtual Volunteer technology will be “transformative” in providing individuals who are blind or visually impaired with powerful new resources to “better navigate physical environments, address everyday needs, and gain more independence.”

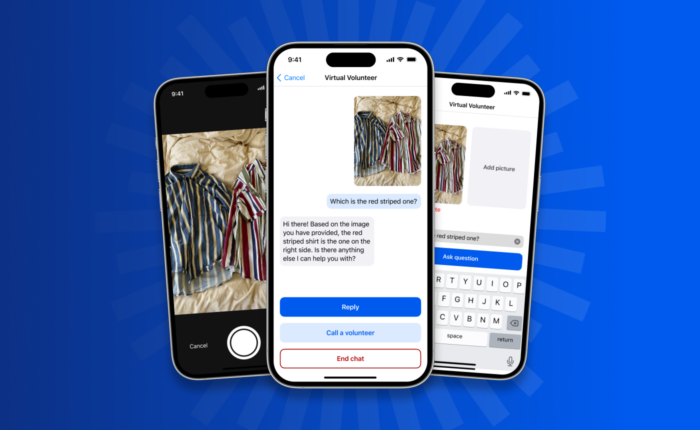

The Virtual Volunteer feature will be integrated into the existing app. It is powered by OpenAI’s new GPT-4 model, which can accept images as input and describe and reason about them in text.

Here’s more from the app’s website:

“What sets the Virtual Volunteer tool apart from other image-to-text technology available today is context, with a deeper level of understanding and conversational ability not yet seen in the digital assistant field. For example, if a user sends a picture of the inside of their refrigerator, the Virtual Volunteer will not only be able to correctly identify what’s in it, but also extrapolate and analyze what can be prepared with those ingredients. The tool can also then offer a number of recipes for those ingredients and send a step-by-step guide on how to make them.”

If the tool cannot answer a question, it will automatically offer users the option to connect with a sighted volunteer through the app.

Overview of the Virtual Volunteer feature:

- Currently in closed beta, where it is being tested with a small subset of users to gather feedback

- The beta group will expand over the next few weeks, and Be My Eyes hopes to make Virtual Volunteer broadly available in the coming months

- Just like the existing volunteer service, this tool is free for all blind and low-vision community members using the Be My Eyes app

Click here to learn more!

To learn more about the Be My Eyes app, check out our blog here.